Are Your Programmatic Pages Safe After Google’s Latest Spam Report Update?

Google is enlisting SEOs and webmasters to report spammy web pages, a move some in the community worry will invite "negative SEO". Its latest spam report seems to target poorly built programmatic pages. I discuss the differences between good and bad programmatic SEO campaigns.

You could argue that Google’s greatest challenge to date is locating and “nuking” spam from its search results.

But because it is so easy to create a site and start publishing from day one (you could publish hundreds of articles with generative AI today), Google has enlisted SEOs and webmasters to help identify and report spammy content.

And while this isn’t the first time Google has relied on user-submitted reports, this does seem like its most aggressive campaign yet. Google calls it the ‘Search Quality User report’.

What is the Search Quality User report?

On June 14, 2023, Google announced the relaunch of its Search Quality User report, which lets users flag pages that may be spammy, deceptive, or low quality.

We're happy to introduce you to our new report for spammy, deceptive or low quality webpages! If you want to learn a bit more, check out https://t.co/ZMIBT7Rqyf for more details.

Google Search Central (@googlesearchc), June 14, 2023

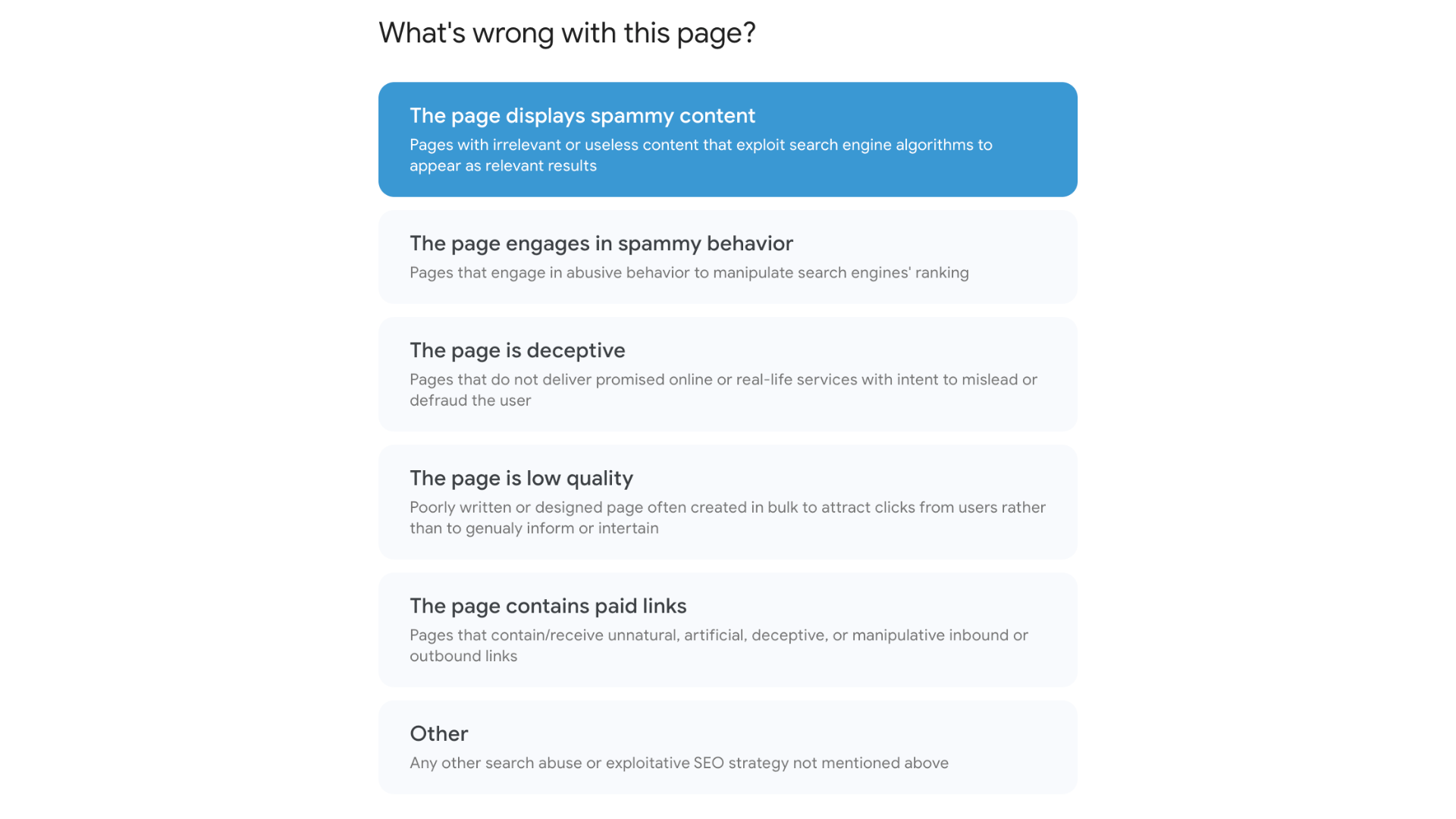

But what kind of content falls into these buckets? According to Google, it includes:

- Irrelevant content with the sole purpose of gaming search engine algorithms.

- Content that engages in spammy behavior, such as misleading search intent or even attempting to defraud visitors.

- Content that appears deceptive or low quality as a way to attract clicks without providing genuine or new information.

- Content containing paid backlinks, a longstanding black hat SEO technique. That said, any honest SEO will tell you that Google still struggles to identify paid link placements.

For those interested, Google provides a breakdown of the different ways your content could get flagged as spam. Now onto how the report works.

How does the Search Quality User report work?

The report is straightforward, consists of two stages, and feels similar to filling out a Google Form.

Stage 1: Select a category for the spammy page

Enter the URL of a page that you feel isn’t up to Google’s search result standards. After that, you’ll need to select one of the six categories listed below.

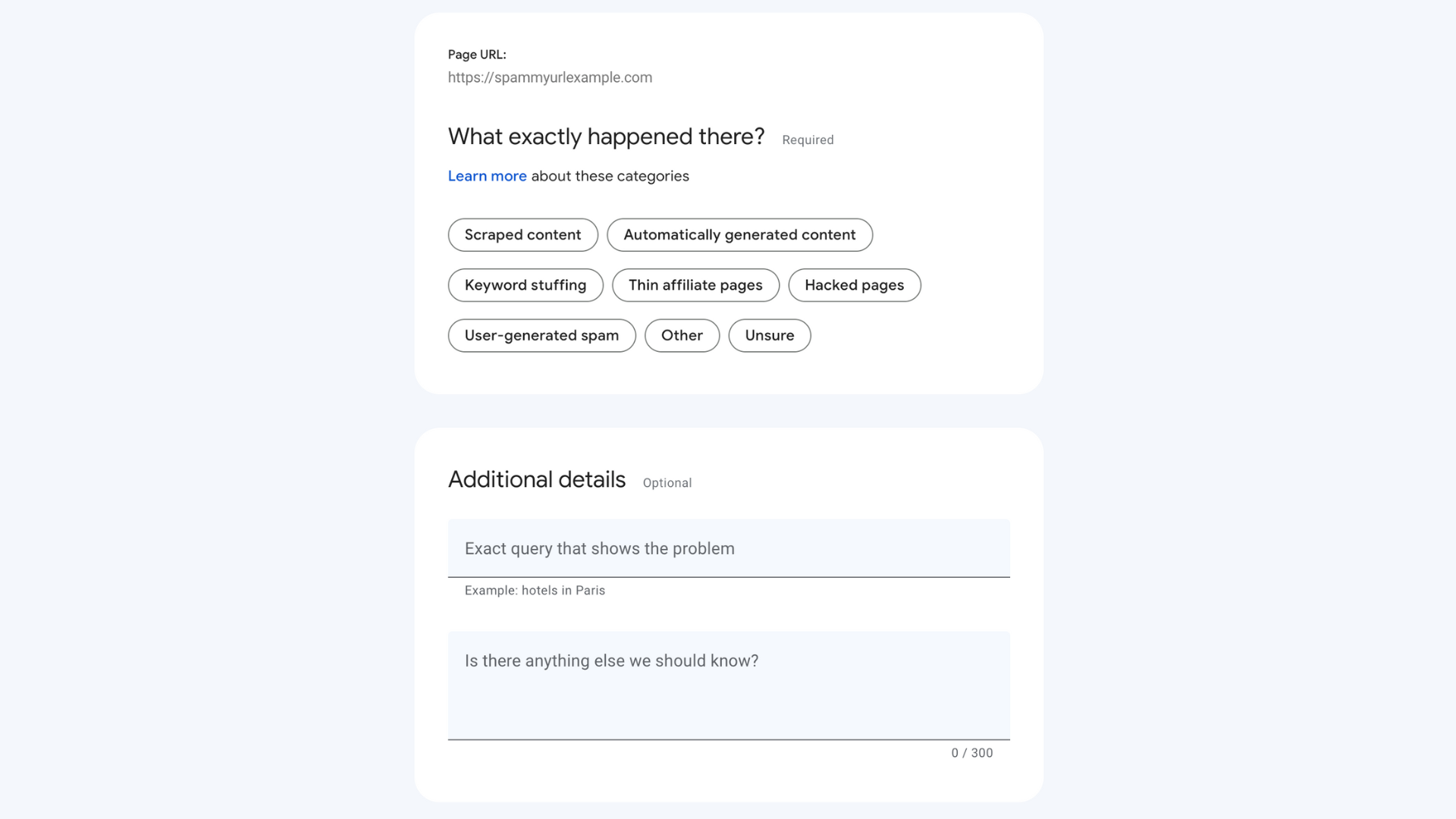

Stage 2: Explain why you believe the page is spammy

Different tags that give context to your report may show up depending on which category you selected. For example, if you selected “this page displays spammy content”, you’ll have to specify your concerns by applying tags.

You’ll also have to provide the exact query you saw this page show up for, and any additional details about the page that give you concerns.

If you need help wording your feedback or deciding whether a page is worth reporting, Google has published a comprehensive list of violations. Your report should take a few days to be processed.

What does this mean for your programmatic pages?

We’ve known for a while about keyword stuffing, paid links, content farms, and other black hat SEO techniques mentioned in Google’s Search violations.

But this time around, it feels like programmatic pages are receiving more attention. A few notable mentions in the Search Quality User report:

- Scraped content: The page displays scraped and assembled content from external sources without adding substantial value.

- Automatically-generated content: The page displays programmatically generated content that often makes no sense to human readers.

- Low quality: Poorly written or designed pages often created in bulk to attract clicks from users rather than to genuinely inform or entertain.

Quick recap - What are programmatic pages?

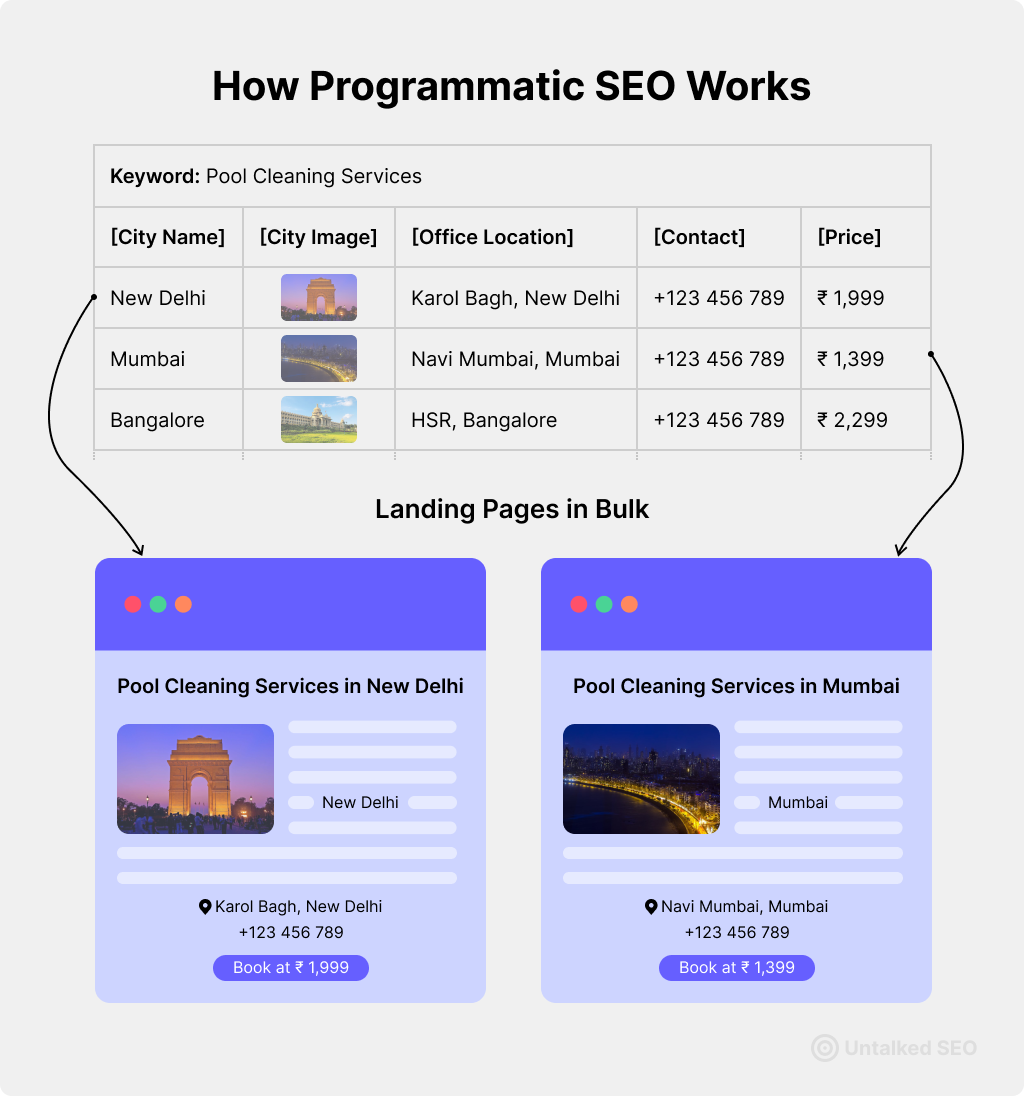

A programmatic page is created automatically (or programmatically) using software, based on certain rules or algorithms. These rules typically involve the use of structured data to produce the content and structure of the page.

In the context of SEO, a programmatic page aims to create unique, valuable, and keyword-optimized content at scale. The goal is to attract organic search traffic by satisfying the user's intent and meeting search engine guidelines.

For example, an e-commerce site might have thousands of product pages. Creating and optimizing each of these manually would be a monumental task that could take months. Instead, the site could use programmatic SEO to automatically create each product page using data from its product database.

The pages would be programmatically populated with product details, images, reviews, related products, etc., and each element would be structured and optimized so that every page is unique.
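To make the idea concrete, here is a minimal sketch of the "template plus structured data" approach described above. It assumes a hypothetical `Product` record standing in for a row in the product database, and hypothetical page fields (`url`, `title`, `meta_description`, `h1`); a real site would pull far richer data and render full HTML. The point is that one template, fed by distinct first-party data, yields distinct pages with clean URLs.

```python
import re
from dataclasses import dataclass


@dataclass
class Product:
    # Hypothetical fields standing in for a real product database row.
    name: str
    category: str
    price: float
    rating: float
    review_count: int


def slugify(text: str) -> str:
    """Lowercase the text and collapse non-alphanumeric runs into hyphens for a clean URL."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def build_page(product: Product) -> dict:
    """Fill one fixed page template with a single product's data."""
    return {
        "url": f"/{slugify(product.category)}/{slugify(product.name)}",
        "title": f"{product.name} Review & Price | {product.category}",
        "meta_description": (
            f"{product.name} costs ${product.price:.2f} and averages "
            f"{product.rating:.1f}/5 across {product.review_count} customer reviews."
        ),
        "h1": product.name,
    }


def build_pages(products: list[Product]) -> list[dict]:
    """Generate every page and refuse to emit two pages with the same URL."""
    pages = [build_page(p) for p in products]
    urls = [page["url"] for page in pages]
    if len(urls) != len(set(urls)):
        raise ValueError("duplicate URLs generated; add a distinguishing field to the slug")
    return pages


if __name__ == "__main__":
    # Hypothetical catalog entries for illustration only.
    catalog = [
        Product("Acme Trail Runner 2", "Running Shoes", 129.99, 4.6, 812),
        Product("Acme Road Racer", "Running Shoes", 149.0, 4.3, 301),
    ]
    for page in build_pages(catalog):
        print(page["url"], "|", page["title"])
```

Running it prints one URL and title per product, for example `/running-shoes/acme-trail-runner-2`. The uniqueness check is deliberately strict: if two records collapse to the same slug, it is better to fail loudly than to publish two competing pages.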

What qualities should high-performing programmatic pages have?

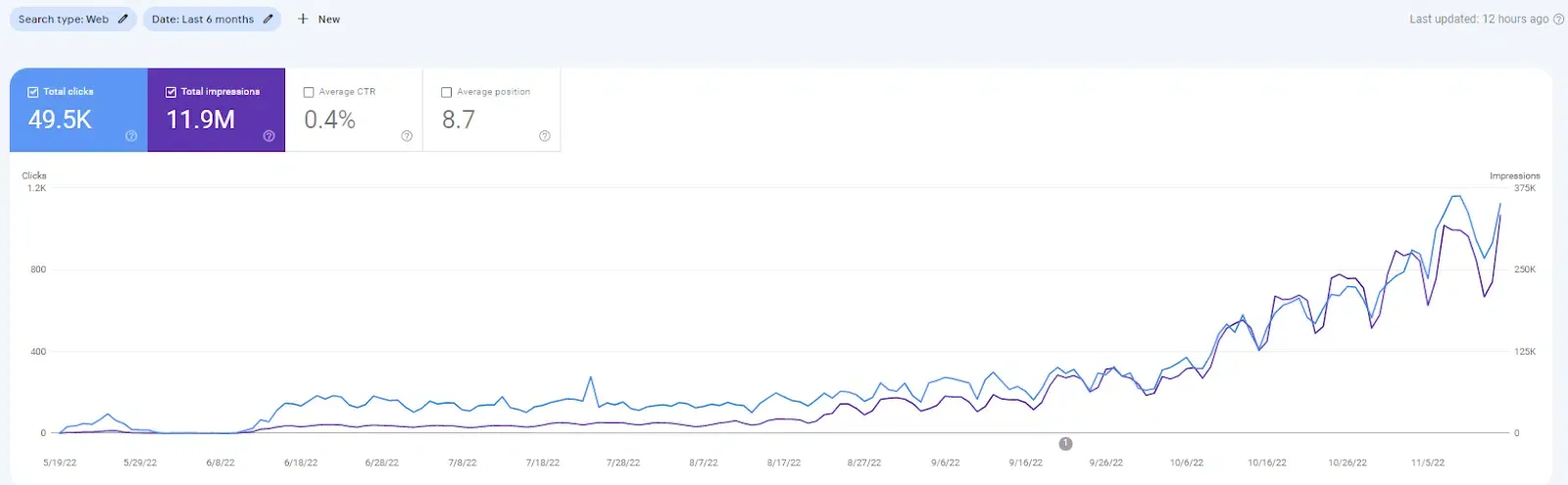

When applied correctly, programmatic campaigns are some of the most successful SEO experiments a brand can conduct. You could build anywhere from a few hundred to millions of pages in a short period of time. If the content is unique and you’re targeting the right keywords, you should see results steadily pick up.

To avoid getting flagged as spam or low quality, it's important to follow some basic principles for your programmatic pages:

- Unique, valuable content: If your pages are auto-generated with little variation or added value, they could be seen as spam. Remember, Google advises against scraping content from other web pages. Use your first-party data to personalize and tailor the content to the user's search intent.

- Keyword usage: While it's essential to include relevant keywords, avoid keyword stuffing. The content should be reader-friendly first. The keywords should fit into the content seamlessly without being a distraction.

- Avoid duplicate content: Make sure that each page is distinct and doesn't duplicate content from other pages on your site. Some brands duplicate content to avoid being flagged as thin content; however, this can backfire. In fact, you run the risk of none of your programmatic pages being indexed.

- Technical SEO: Ensure that all your programmatically generated pages follow SEO best practices. This includes having a clean URL structure, correct usage of meta tags, proper header hierarchy, and fast loading times.

- UX: Pay attention to the user experience (UX). The pages should be easy to navigate, visually appealing, and mobile-friendly.

- Regular updates: Keeping content fresh and up-to-date is another way to signal to Google that your content is real, relevant, and not spammy. Programmatic content allows you to update pages at scale when necessary.
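Before publishing a batch of generated pages, it can help to check them against each other for near-duplicate copy. The sketch below is one common, simple technique (word shingles compared with Jaccard similarity); it is not how Google detects duplicate content, which Google does not publish. The shingle size `k=3`, the `0.8` threshold, and the sample URLs and copy are arbitrary illustration values, so tune them against your own pages.

```python
from itertools import combinations


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word sequences (shingles) in the text."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i : i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Share of shingles two pages have in common (0.0 = disjoint, 1.0 = identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def near_duplicates(
    pages: dict[str, str], threshold: float = 0.8, k: int = 3
) -> list[tuple[str, str, float]]:
    """Flag every pair of pages whose shingle overlap meets the threshold."""
    sets = {url: shingles(body, k) for url, body in pages.items()}
    flagged = []
    for (url_a, s_a), (url_b, s_b) in combinations(sets.items(), 2):
        score = jaccard(s_a, s_b)
        if score >= threshold:
            flagged.append((url_a, url_b, round(score, 2)))
    return flagged


if __name__ == "__main__":
    # Hypothetical generated pages: the first two differ only in the product name.
    pages = {
        "/crm/acme": "Acme CRM helps small sales teams track deals and automate follow-up emails.",
        "/crm/globex": "Globex CRM helps small sales teams track deals and automate follow-up emails.",
        "/crm/initech": "Initech CRM is built for enterprise support desks with SLA tracking and ticket routing.",
    }
    print(near_duplicates(pages))
```

Here the Acme and Globex pages share 9 of 11 distinct shingles (a score of about 0.82), so they are flagged even though the template swapped in a different product name. Pairwise comparison grows quadratically with page count, so for very large sites a technique such as MinHash is the usual next step.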

Real-life example: I worked on the content team at G2.com while the technical SEO team started rolling out programmatic pages. Because the team used first-party data (such as software ratings and customer reviews), targeted the right terms, and adhered to SEO best practices, the campaign was a success. It’s why you’ll see G2 rank in the top 10 for nearly every “best x software”, “x software alternatives”, or “top x platforms” keyword variation today.

When do programmatic pages become spam?

In my SEO consulting work, programmatic pages have been all the hype lately. I get it: with tighter budgets and resources, brands are looking to hack traffic growth as quickly and efficiently as possible. Naturally, programmatic SEO becomes a shiny object.

But a programmatic page is not to be confused with low-quality, auto-generated content that scrapes third-party data from external sources and passes it off as original content.

While it can be tempting to run this experiment, you could do your site more harm than good. This could lead to your pages not being indexed, a decline in website traffic (which can be difficult to recover from), and wasted SEO and technical resources. You may also hurt your website’s reputation as a trusted source of content.

With the Search Quality User report attempting to streamline feedback against spammy web pages, it’s not worth the risk of auto-generating bad content!

Why there’s general concern with this tool

Like most news that comes out of Google Search Central, this revamped tool was met with harsh criticism.

Many SEOs replying to the Twitter announcement claimed that this tool will be abused to hurt competitors' rankings. In a hypothetical scenario, if I were a small accounting SaaS company and saw a competitor outranking me for several key terms, couldn’t I just flag all their pages as spam and derank them? SEOs describe this tactic as “negative SEO”.

While it remains to be seen how black hat SEOs will abuse this tool (because let's face it, they absolutely will), I feel confident that Google will put guardrails in place against spam submissions. Google has already stated it won't look at duplicate submissions from the same account.

It's also worth noting that Google’s AI spam detection system, SpamBrain, is sophisticated enough to identify ~99% of spam content today.

In fact, SpamBrain detected 5 times more spam sites in 2022 than in 2021, and 200 times more than when it first launched. It’s why we rarely see spam sites rank in the top dozen or so search results.

And the scenario I described above? I have my doubts that a negative SEO campaign would be that effective. But therein lies a new experiment waiting to happen: who is bold enough to run a negative SEO campaign against their competitors and publish the results? Hypotheticals make for good social buzz, but the truth lies in the data.